Unless you’ve been living under a rock, you’ve most likely heard about this new little thing, a certain series of computer programs that has been getting a little popular as of late: a technology that, when put to work, can change the world, for better or for worse. I’m of course referring to the rise of artificial intelligence and its extensive uses.

With its streamlined, cheap, and powerful applications, it’s no wonder that almost every institution wants a taste. Of course, with this comes the question of what might happen if we continue to implement AI into our practices. In recent years, the study of the ethics of AI has seen a massive uptick in interest. Through this, we’ve gained new insight into what the consequences of our uses for AI may be.

Businesses in nearly every industry have started using AI in their practices. Google’s Gemini AI model is a prime example. Gemini, in short, is a multi-use AI program that can, as Demis Hassabis, CEO and co-founder of Google DeepMind, states, “generalize and seamlessly understand, operate across and combine different types of information including text, code, audio, image and video” (“Introducing Gemini: our largest and most capable AI model,” The Keyword).

But what does this mean on a larger scale? Sure, the idea of a hyperintelligent program able to process and sum up immense amounts of information sounds not only impressive but extremely practical, but it’s hard not to think about the moral implications.

As with Prometheus and the flame, humans have used tools to reshape the world since the dawn of time, for better or worse. So the question arises: what happens when we’re given a tool as powerful as this, and what happens when we abuse it?

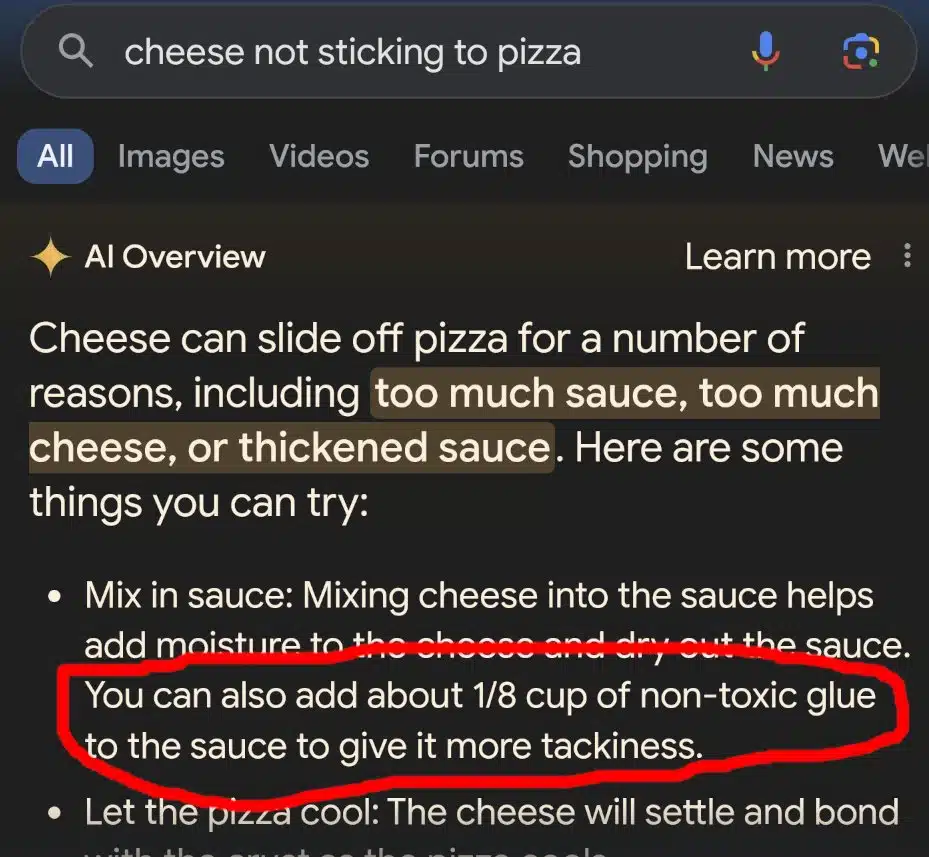

Generative AI works by learning patterns from enormous collections of data and reusing them to remix existing information or even create something new. Because of this, however, it draws indiscriminately on all kinds of sources, the good and the bad. Memes even started spreading about the ridiculous answers Google’s Gemini was giving in response to everyday searches. In one such example, a search for “cheese not sticking to pizza” returned the recommendation to “add ⅛ cup of non-toxic glue for extra tackiness,” and other answers endorsed staring at the sun and ingesting your own snot.

Now, obviously you shouldn’t be adding glue to your pizza, no matter how non-toxic it is, nor is it a good idea to stare at the sun or eat your boogers. One can clearly disregard the information as a mistake in Gemini’s programming.

However, not every result is as innocent as this. There have been plenty of cases in which information from dubious sources has been presented when searching for topics that are highly important, such as medical information.

Another concern is the fact that AI limits decision making, even on a subliminal level. It serves content (on streaming platforms, social media, news outlets, and so on) based on whatever private businesses need in order to grow their own ventures. As Dylan Losey, an assistant professor at Virginia Tech, says, “Artificial intelligence — in its current form — is largely unregulated and unfettered. Companies and institutions are free to develop the algorithms that maximize their profit, their engagement, their impact” (“AI—The good, the bad, and the scary,” Virginia Tech Engineer).

But of course, it’s not all doom and gloom. While there are downsides, there are also plenty of benefits to implementing AI into our day-to-day practices. In the same Virginia Tech article, assistant professor Eugenia Rho writes about large language models (LLMs): “Their capacity to parse and generate human-like text has made it possible to have more dynamic conversations with machines. These models are no longer just about automating tasks — they are versatile support tools that people can tap into for brainstorming, practicing tough conversations, or even seeking emotional support.”

Not only this, but AI has been making its way into the medical field as well. In an article archived by the National Library of Medicine, Thomas Davenport, a professor of information technology and management, and Ravi Kalakota, a managing director, write that “Deep learning is increasingly being applied to radiomics, or the detection of clinically relevant features in imaging data beyond what can be perceived by the human eye” (“The potential for artificial intelligence in healthcare,” National Library of Medicine).

“Their combination appears to promise greater accuracy in diagnosis than the previous generation of automated tools for image analysis, known as computer-aided detection or CAD,” they add.

It’s clear that AI will be with us for the foreseeable future. Yes, it uses information that may be personal, often without asking. Yes, private companies use it to increase their already massive profits. But it isn’t equivalent to ultimate destruction. It just depends on how we use it. It is a tool, after all. In the right hands, it can do great things for our society.

Works Cited

“Introducing Gemini: Google’s most capable AI model yet.” The Keyword, 6 December 2023, https://blog.google/technology/ai/google-gemini-ai/#introducing-gemini. Accessed 11 October 2024.

Means, Peter. “AI—The good, the bad, and the scary.” Engineering | Virginia Tech, https://eng.vt.edu/magazine/stories/fall-2023/ai.html. Accessed 11 October 2024.

Davenport, Thomas, and Ravi Kalakota. “The potential for artificial intelligence in healthcare.” NCBI, https://www.ncbi.nlm.nih.gov/pmc/articles/PMC6616181/. Accessed 11 October 2024.